(This was not originally planned to be my first article, but the timing is too tempting to miss)

The PS5 Pro is just about to hit the public, touting a 45% increase in Graphical Compute Power, a proprietary, machine-learning-based upscaling technology, and improved raytracing calculation, presumably supported by hardware.

(Yes, that’s a PS4 controller pictured above, but it was just so moody that I had to use it)

If you’ve been in the enthusiast computer hardware space for a while or are a fidelity die-hard, you probably find this to be a pretty substantial set of upgrades for a mid-generation console refresh. However, what about the core console audience? The kind who would never dream of doubling the price of their computer just to upgrade one component? The kind who might still be playing on a 1080p screen and have no qualms playing at 30fps?

Perhaps this is to be expected, as it is a luxury “Pro” model, not a full generational upgrade, but there really is nothing new here for that crowd. It makes the disc drive optional, as the Slim did; sells the stand separately; and offers no new ways to play your games. All of its features have been available from the start, just in a reduced form.

Many practical PlayStation fans are probably thinking: “Why not just wait for the PS6? It’s not that much further off.”

And then there’s the software. Microsoft stopped making console-exclusive games years ago, and now even Sony has ported almost every one of its flagship titles from this generation and the last to PC. The Pro announcement gave no indication that this was going to change, which puts the Pro’s selling point in direct competition with what’s already possible on PC: PlayStation games with even higher fidelity.

Now, the price does make it a better deal pound-for-pound, but I’m going to ignore the economics today and focus on the actual capabilities.

To the core audience, the Pro is nothing new; it is “the same games but shinier.” But since that’s already the selling point of the PC market, its only justification is to offer a middle ground between accessibility and premium fidelity.

This exacerbates the cultural perception problem this console generation has suffered from since the start: what am I supposed to be excited about?

Gaming is an entertainment industry. People want to be entertained. People—especially Americans—like novelty and thrill.

This is not that. It is luxury, and luxury is actually rarely exciting or novel. It is typically very traditional, but refined.

To those of us who aren’t chasing novelty, it’s great, but if refinement is what a player primarily wants, they wouldn’t be on a console these days in the first place. So why is Sony putting effort into that instead of delivering something new with every console launch? Why won’t they make us excited to be here?

When we went from the NES to the N64 we got a whole new dimension of rendering. When we got the Xbox we got internal hard drives and internet access. When we got the PS3 we got multitasking dashboards and digital game distribution. Not to mention the dramatic, immediately tangible leaps in fidelity generation by generation.

What does the PS5 have? Nicer rumble? Nicer graphics? Nicer UIs? We had all of those things before, who cares!? Where is the new!? What does it matter that the processor is faster if it’s not doing anything the last generation couldn’t!?

…

The thing is, though, more raw performance is the only thing modern console designers can offer to achieve new things on the systems of the day. And that is because the entire burden of innovation has been laid on software.

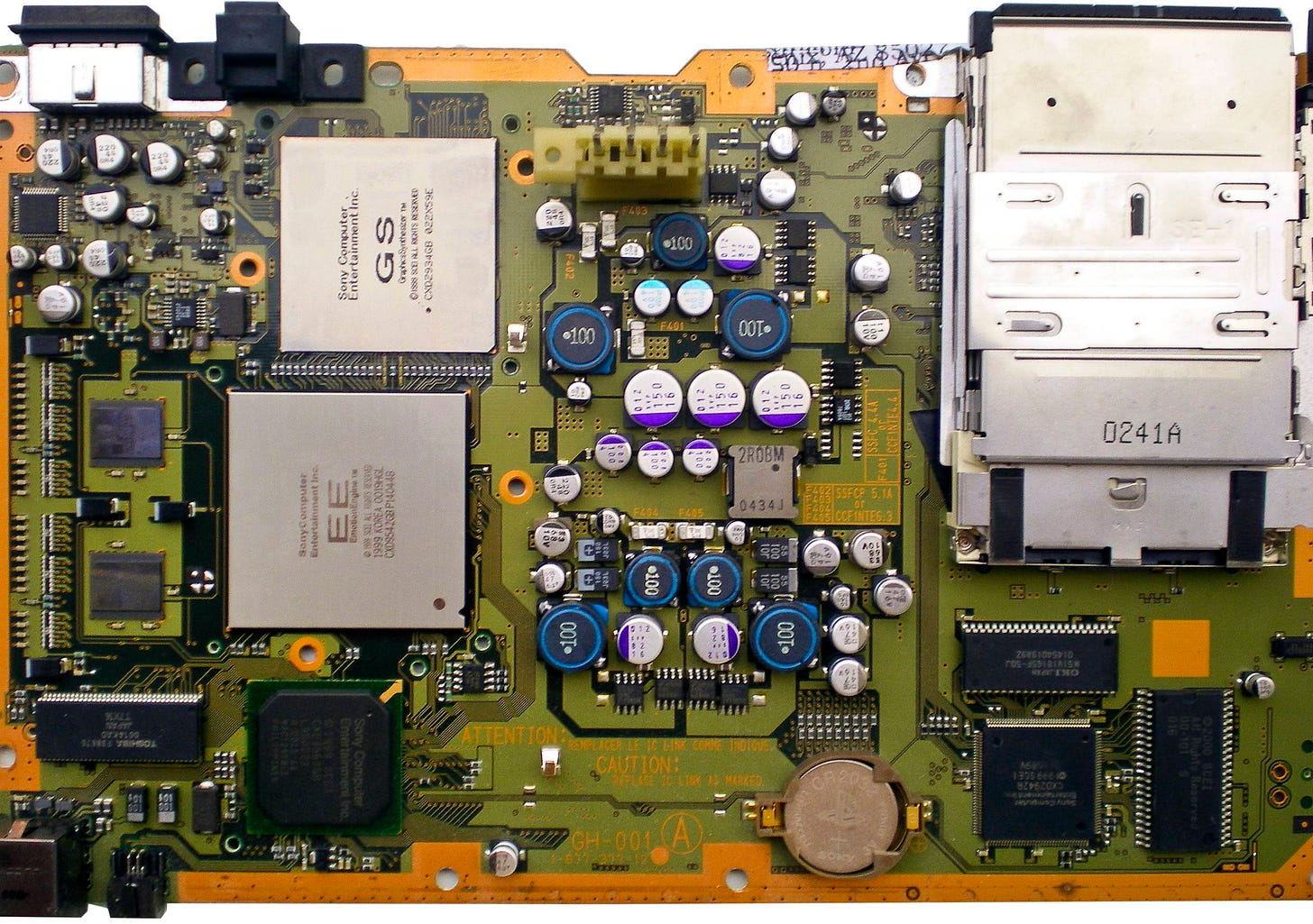

Back in earlier days of consoles, if you wanted a certain graphical feature supported (like 3D geometry, transparent glass, or screen blur effects), that support had to be baked into the silicon of the device. If you were an absolute genius of a programmer, you might have discovered a way to leverage existing features for unexpected effects, but that had mathematical limits, and in the overwhelming majority of cases what a console could render depended on what the console designers themselves put into the machine. So you ended up with very distinct visuals for each console.

As a tangible example of what I’m talking about, look at the 3D model wobble on the PS1, or the fog effects in Silent Hill 2, and how those quirks or features were lost in ports.

Modern Vintage Gamer has a video that does a good job of explaining the PS1 wobble effect.

Consoles used to be very opinionated about the look of a game, because they were more directly responsible for how that happened. Did the developer want anti-aliasing in their game? Well, their options for performant methods were whatever Sony or Nintendo etched into the processor. Want the lighting on your model to look smooth and organic? It’s up to the shader model implemented on the device. If you’ve heard about a “Shader Unit” on a PS2, that was literally a circuit wired into the board that determined what color to “shade” a part of a triangle on a model.

Nowadays, however, the hardware doesn’t care what you’re doing. It takes whatever numbers you throw at it and crunches them together however you tell it to. The “central” processing unit of a device, the CPU, has always worked like this, which is why game logic could do whatever it wanted. It is a general-purpose programming device that can solve any theoretically plausible calculation you throw at it, as long as you give it enough time and memory.

And that time factor is exactly why consoles do not use it to render anything, and why PCs largely stopped doing so by the early 2000s. Even as the highest-clocked (that is, the fastest-running) processor in a computer, it is still too slow to calculate the color of each of the thousands or millions of pixels on a display 30, 60, or more times per second. That requires figuring out the screen position of every visible triangle in the game world, determining its color, blending it with any transparent effects, calculating the shading from lighting, and then adding whatever post-processing effects the developers need to perfect the look.

To resolve this, you make processors that don’t solve every problem. You make them to solve specific problems. I’ll try not to turn this into a full college lecture, but to explain what I’m getting at, we have to get a bit into the numbers:

First, if you’re not familiar, a computer processor operates in “cycles.” That is, it “goes” as its clock signal “pulses,” and each pulse moves all of its calculations (its “computing”) forward by one step. As a rule of thumb, a processor can perform at least one simple calculation per cycle. Modern processors have ways of doing several at once, while on the other hand, some requested calculations actually consist of many simpler ones.

At the end of the day, the processor is an arithmetic solving machine. That is what it does.

In any case, a useful analogy is to think of the processor as a bike, and each “cycle” as one rotation of the wheel. The more rotations it makes in a second, the faster the bike is going.

So now, some numbers:

The PS5, rendering to a 4k screen at 60 frames per second, has to calculate the color of about 498 million pixels per second.

To do so, it has a GPU clocked at up to 2233 MHz, which is about 2.2 billion cycles per second.

Well, wonderful, the processor can do at least 2.2 billion processes a second, and we only have about 500 million things to process a second. That means we should even be able to hit 240 frames a second!

Cool!
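The arithmetic so far fits in a few lines of Python. This is a sketch, not a benchmark: it assumes a 3840×2160 frame for “4k” and treats 2233 MHz as a fixed clock, although the PS5’s GPU clock actually varies; the function names are mine.

```python
# Back-of-the-envelope check of the 4k 60 numbers above.
# Assumptions: "4k" means 3840x2160, and the GPU runs at a fixed 2233 MHz.
WIDTH, HEIGHT, FPS = 3840, 2160, 60
GPU_CLOCK_HZ = 2233e6  # cycles per second


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels that must be colored every second at a given resolution and frame rate."""
    return width * height * fps


def one_pixel_per_cycle_fps(clock_hz: float, width: int, height: int) -> float:
    """Frame rate if the GPU finished exactly one pixel every cycle."""
    return clock_hz / (width * height)


if __name__ == "__main__":
    print(f"{pixels_per_second(WIDTH, HEIGHT, FPS):,} pixels per second")
    print(f"{one_pixel_per_cycle_fps(GPU_CLOCK_HZ, WIDTH, HEIGHT):.0f} fps ceiling")
```

Run as a script, it prints 497,664,000 pixels per second and a ceiling of about 269 fps, so the 240 fps figure is, if anything, conservative.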

In fact, the GPU is rated for a theoretical performance of 142.9 billion pixels per second once you count all of its tricks for doing multiple calculations per cycle.

A 4k screen is only a mere 8.3 million pixels, so that means…

It should be capable of 17,228 frames per second!

Wow!
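Dividing the rated fill rate by the clock shows what those “tricks” add up to: roughly 64 pixels per cycle at peak. A minimal sketch using only the two figures quoted above:

```python
# Peak fill-rate arithmetic, using only the figures quoted in the text.
ADVERTISED_FILL_RATE = 142.9e9  # pixels per second, the rated theoretical peak
GPU_CLOCK_HZ = 2233e6           # cycles per second
FRAME_PIXELS = 3840 * 2160      # one 4k frame, about 8.3 million pixels

# How many pixels per cycle the rating implies (about 64).
peak_pixels_per_cycle = ADVERTISED_FILL_RATE / GPU_CLOCK_HZ

# Frames per second if every one of those pixels went straight to the screen.
theoretical_fps = ADVERTISED_FILL_RATE / FRAME_PIXELS

print(f"{peak_pixels_per_cycle:.1f} pixels per cycle, {theoretical_fps:,.0f} fps")
```

It prints 64.0 pixels per cycle and 17,228 fps.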

Except in the real world we know that often we don’t even hit 60 fps on the PS5. Many games settle for 30… Why? If the GPU can fill that many pixels in a second why aren’t we even getting a hundredth of that performance?

It’s because that naive theoretical fill rate assumes a screen pixel is a simple calculation—but it is not: the GPU has to look up textures in memory, do complex math to figure out the geometry of the scene, composite multiple layers of intermediate representations, etc. All those effects I talked about earlier.

We can work backwards to find out how many pixels the GPU is actually drawing every “cycle” at a given resolution and framerate. So, at 4k 60 it comes to about…

0.22 pixels per cycle, or roughly 4 and a half cycles per pixel. And that’s working out the math as though the GPU were handling them all one at a time rather than in parallel.

Okay… So the PS5 is rendering at about 0.3% of its advertised pixel fill rate and 22% of its naive cycle-for-cycle projected fill rate. Well, whatever, the numbers all sounded made up anyways. The important part is that it’s better than old hardware, right?
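Working backwards is the same arithmetic turned around. A sketch, assuming the game really does render every one of its 4k pixels at a locked 60 fps:

```python
# Working backwards from a locked 4k 60 to the achieved rate per cycle.
GPU_CLOCK_HZ = 2233e6
ADVERTISED_FILL_RATE = 142.9e9
achieved_pixels_per_second = 3840 * 2160 * 60  # 497,664,000

pixels_per_cycle = achieved_pixels_per_second / GPU_CLOCK_HZ  # about 0.22
cycles_per_pixel = 1 / pixels_per_cycle                       # about 4.5

# Share of the rated peak (about 0.35%) and of one pixel per cycle (about 22%).
share_of_advertised = achieved_pixels_per_second / ADVERTISED_FILL_RATE
share_of_naive = pixels_per_cycle / 1.0

print(f"{pixels_per_cycle:.3f} px/cycle, {cycles_per_pixel:.2f} cycles/px")
print(f"{share_of_advertised:.2%} of advertised, {share_of_naive:.0%} of naive")
```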

The PS2 must be WAY less efficient… right?

Well, on a 480p CRT at 60 fps, my math shows the PS2 working at around 0.125 pixels per cycle. So only about half the rate, cycle for cycle, of a system almost 20 years newer.

Half. Just. Half.

“Okay,” you more math-minded readers might say, “you’re basing that on 1/27th the number of pixels to fill. 480p is way smaller. If you use the same screen size, the PS5 is around 27 times faster.”

You would be right, but note another interesting number: the PS2’s graphics processors were clocked at 150 MHz, or about 1/15th the speed of the PS5’s 2233 MHz.

So, if you somehow clocked the PS2’s graphics hardware up to the same speed, it would be roughly pixel-for-pixel equivalent to the PS5 running a game at 30 frames per second, a very common framerate these days. And that would be native 4k.
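The PS2 side of the comparison can be sketched the same way. Two assumptions are mine: a 640×480 frame for “480p,” and the Graphics Synthesizer’s 147.456 MHz clock (the “150 MHz” above, before rounding), with which the 0.125 figure comes out exactly.

```python
# PS2 vs. PS5, pixels per cycle. Assumptions: 480p = 640x480, 4k = 3840x2160,
# PS2 Graphics Synthesizer at 147.456 MHz, PS5 GPU at a fixed 2233 MHz.
PS2_CLOCK_HZ = 147.456e6
PS5_CLOCK_HZ = 2233e6
PS2_FRAME = 640 * 480
PS5_FRAME = 3840 * 2160


def pixels_per_cycle(frame_pixels: int, fps: int, clock_hz: float) -> float:
    return frame_pixels * fps / clock_hz


ps2 = pixels_per_cycle(PS2_FRAME, 60, PS2_CLOCK_HZ)  # 0.125
ps5 = pixels_per_cycle(PS5_FRAME, 60, PS5_CLOCK_HZ)  # about 0.223
pixel_ratio = PS5_FRAME / PS2_FRAME                  # 4k has 27x the pixels of 480p

# A hypothetical PS2 clocked up to 2233 MHz, keeping its pixels-per-cycle rate,
# drawing native 4k frames: about 33.7 fps.
overclocked_ps2_4k_fps = ps2 * PS5_CLOCK_HZ / PS5_FRAME

print(f"PS2/PS5 per-cycle ratio: {ps2 / ps5:.2f}")
print(f"overclocked PS2 at native 4k: {overclocked_ps2_4k_fps:.1f} fps")
```

So the PS2’s per-cycle rate is a little over half the PS5’s, and the overclocked PS2 lands a bit above 30 fps at native 4k.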

HOW!?!

This is a very complex problem, but after studying the hardware I believe I have an answer to one major factor: hardware rendering. The PS2 is arguably mostly hardware rendered, while the PS5 is mostly software rendered.

“Wait, I’m a graphics programmer,” you might say, “and that doesn’t make sense! GPU rendered graphics are hardware rendering!”

Yes and no. GPUs of today are fundamentally different from the graphics chips of yore.

That thing I said about the central processor being too slow for rendering because it’s made for general problem solving? Well, a modern GPU is actually also a general-purpose processor (a GPGPU, to use the technical terminology), and it’s equivalent to HUNDREDS of very pared-down CPUs clustered together to run in parallel. This is actually really cool and makes some amazing things possible, beyond just graphics, that you physically can’t do with hardware-based renderers. And it means the same code can run on multiple different physical models of GPU without the game developer having to lift a finger.
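The reason the same code can spread across hundreds of cores is that a pixel shader computes each pixel from its own inputs, independently of its neighbors. The toy Python below only illustrates that property: it runs sequentially, `shade` and `render` are hypothetical names, and it is nothing like how a real GPU schedules work.

```python
# A toy "pixel shader": computes one pixel's color from its own coordinates,
# without reading any other pixel. That independence is what lets a GPU
# hand pixels to many small cores in whatever order is convenient.
def shade(x: int, y: int, width: int, height: int) -> tuple:
    red = 255 * x // (width - 1)     # 0 at the left edge, 255 at the right
    green = 255 * y // (height - 1)  # 0 at the top, 255 at the bottom
    return (red, green, 128)


def render(width: int, height: int, cores: int) -> list:
    """Deal pixels round-robin to `cores` simulated workers (width, height >= 2)."""
    frame = [None] * (width * height)
    for core in range(cores):
        # Each simulated core shades every `cores`-th pixel.
        for i in range(core, width * height, cores):
            frame[i] = shade(i % width, i // width, width, height)
    return frame


# The image is identical no matter how many cores share the work.
assert render(64, 32, cores=1) == render(64, 32, cores=100)
```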

There’s a little more to the history of GPU hardware that I won’t explain here, but suffice it to say, without this kind of hardware design, the gaming PC industry would look a lot like consoles. In fact, I would go so far as to say that GPUs are the only reason consoles aren’t the most powerful gaming systems on the market.

In older generations of hardware, if you wanted to support a new rendering technique, new hardware wasn’t just nice to have, it was often mandatory. And that applied to graphical effects that are now so ubiquitous that you wouldn’t even think about a game engine not having them, like see-through glass.

Yes. Glass you can see through. One of the primary purposes of glass.

Now, with modern GPUs, developers have the ability to try all manner of methods to render all manner of things. There are almost no hard limits to what can be done.

The tradeoff is that it’s now on the developer of the game engine to support the features the artists want, and it’s especially on them to make those features performant, so the amount of work the developer is responsible for has gone up in proportion to the power they now wield.

To go back to the bike analogy, we’ve traded the bicycle for a motorcycle, but now we’re also driving in a zig-zag line while popping a wheelie on the way there.

Three engines can implement the same feature and end up with three completely different performance characteristics. And then even with the same engine, what works in 2-4 cycles on one GPU might take 12-16 on another, and on another it might run fine 99% of the time but thrash memory and grind to a halt on that unfortunate 1%.
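That last case is worth putting numbers on, because a rare slow path can dominate the average. The costs here are made up purely for illustration: 4 cycles on the common path, 400 on the unlucky 1% that thrashes memory.

```python
def average_cycles(fast_cost: float, slow_cost: float, slow_fraction: float) -> float:
    """Expected cost per invocation when a fraction of cases take the slow path."""
    return (1 - slow_fraction) * fast_cost + slow_fraction * slow_cost


# Hypothetical costs: 99% of invocations at 4 cycles, 1% at 400 cycles.
typical = average_cycles(4, 400, 0.0)  # 4.0 if the slow path never happened
actual = average_cycles(4, 400, 0.01)  # 0.99*4 + 0.01*400 = 7.96
print(f"{actual / typical:.2f}x the typical cost")  # about 2x
```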

Ironically, this almost defeats the whole purpose of the “write once, run anywhere” promise of modern graphics programming, and of programming in general. But, to gloss over the details, this setup is actually rather robust to error: you generally get performance degradation long before you get a proper “break,” so more often than not you can scale around the problem.

Does the next generation run that code 10% slower per processing core? Well, add 20% more processing cores and now it’s faster overall! Sometimes that actually is the best strategy, because the new way of doing things lets you add not just 20% but 30, 40, or even 100% more cores.
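The “slower cores, but more of them” trade works because the two factors multiply. A tiny sketch with the percentages from the paragraph above, in relative units rather than measurements:

```python
def relative_throughput(per_core_speed: float, core_count_factor: float) -> float:
    """Overall throughput relative to the old design (1.0 = unchanged)."""
    return per_core_speed * core_count_factor


# Each core runs the code 10% slower (0.9); try 20%, 30%, 40%, and 100% more cores.
for extra_cores in (1.2, 1.3, 1.4, 2.0):
    print(f"{extra_cores:.1f}x cores -> {relative_throughput(0.9, extra_cores):.2f}x overall")
```

Even the smallest case comes out about 8% ahead (0.9 × 1.2 = 1.08), assuming the work splits perfectly across cores, which real workloads rarely do.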

Still, as games get more ambitious, and as the generations of technology progress and add more tools to the belt, this leads very naturally (but maybe not ideally) to further division of labor, and thus to the rise of third-party game engines.

If there’s now a nearly uncountable number of real platforms to support and a theoretically infinite number of rendering features you could implement, why not rely on a group focused entirely on doing that work to the modern standard, so the actual game developer can just focus on the game?

This is not a bad idea, as long as the engine is managed well (another topic for another day), but it tends to erase whatever actual differences still remain between consoles.

Does the PS4 have a touchpad on the controller? Neat! No one cares, because the Xbox and PC don’t, so we’re not going to waste developer time on a minor feature that we can’t duplicate on all of the platforms we support.

That leads us to the crux of this article and the practical reality that new consoles don’t really mean anything to developers other than the performance headroom they bring to the table. Apart from dedicated first-party developers and a few crazy people, no one programs their games for the consoles themselves, because the potential benefit is so minimal.

Unless the console can provide a cutting-edge feature SO incredible that it would double sales just by existing, or the feature can reasonably find support on other platforms, it’s very rare for it to get a moment of thought from third-party developers.

Beyond the console itself, everything has a USB port, which is a CPU-driven, general-purpose way to support peripherals, so there’s no actual limit there from generation to generation either… except… performance headroom.

Those fancy new DualSense controllers? Yeah, those could work on PS4 if Sony wanted.

Going back to graphics, the features that do get different levels of support between platforms do so because the differences between platforms are little more than a slider or number change. Textures are already made in high quality when the artist works on them; PC players just get a final product that’s less compressed than what ends up in the console build. Anti-aliasing levels are a single number change. Lighting engines are all about scaling the number of active lights and how many times rays bounce. None of those require fundamental differences… most of the time.
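In code, that kind of platform difference often amounts to a handful of numbers fed to the same rendering path. Everything below is hypothetical: the field names, the two presets, and their values are invented for illustration, not taken from any engine.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class QualityPreset:
    # Hypothetical settings; every one is a plain number, not a different algorithm.
    texture_max_size: int   # largest texture edge shipped, in pixels
    msaa_samples: int       # anti-aliasing level
    max_active_lights: int  # lights evaluated per frame
    ray_bounces: int        # bounces per ray in the lighting pass


CONSOLE = QualityPreset(texture_max_size=2048, msaa_samples=2, max_active_lights=16, ray_bounces=1)
PC_ULTRA = QualityPreset(texture_max_size=4096, msaa_samples=8, max_active_lights=64, ray_bounces=3)


def differing_settings(a: QualityPreset, b: QualityPreset) -> list:
    """Names of the settings that differ between two presets."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


print(differing_settings(CONSOLE, PC_ULTRA))
```

The two presets differ only in values, never in which fields exist, which is the point: the rendering code that consumes them can stay the same on every platform.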

Ray tracing, however, was a little different. Ray-traced lighting engines require so much raw compute power that supporting them in software alone is realistically impossible on the processors of the day unless you make major concessions in how you use them.
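For a sense of scale, here is a rough ray count. The assumptions are mine and deliberately modest: one light path per pixel at 4k 60, made of one primary ray plus two bounces, with shadow rays and denoising ignored.

```python
def rays_per_second(width: int, height: int, fps: int,
                    samples_per_pixel: int, bounces: int) -> int:
    rays_per_sample = 1 + bounces  # the primary ray plus one ray per bounce
    return width * height * fps * samples_per_pixel * rays_per_sample


rays = rays_per_second(3840, 2160, 60, samples_per_pixel=1, bounces=2)
rays_per_gpu_cycle = rays / 2233e6  # against the PS5 GPU's 2233 MHz clock

print(f"{rays:,} rays per second, {rays_per_gpu_cycle:.2f} per GPU cycle")
```

That is about 1.5 billion rays per second, or two thirds of a ray every cycle. Every one of those rays then has to be tested against the scene’s geometry, typically by walking an acceleration structure such as a bounding volume hierarchy, which costs far more than a single cycle.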

Generally these concessions are using it only for a few minor parts of the render where it’ll have the most obvious impact, like reflections on mirror surfaces.

What makes full ray tracing worth it over the “lesser” version is yet another topic for another day, but let’s assume it is for now, and then know that the only real way to achieve that level of compute output right now is through our old, forgotten friend: hardware support.

That’s right, we’re going forward by going back. A real engineer knows that no methodology ever dies; its use cases just change. In any case, this is what Nvidia did to get PC nerds to never shut up about their graphics cards: they made hardware specifically for the most computationally heavy process in graphics.

And that’s what AMD—and thus the PS5 and Series X—did not do. Their cards are still mostly composed of general purpose processing circuits. AMD did cobble together some tricks to kind of catch up, so they are capable of some of those limited forms of ray tracing, but game developers were still left with a choice on what to support and they of course opted for the lowest common denominator. Consoles are a large market, and Nvidia users still get the advantage of being able to run those reduced forms better than everyone else, so they can keep bragging even if it’s not even half the result Nvidia was going for.

Upscaling technologies followed a similar story on the same timeline, with the difference being that the race was to find a method that made everything else faster without sacrificing much visual quality.

Which brings us back to the PS5 Pro, because it seems to finally be doing the thing consoles had stopped doing: providing hardware support for “new” features. It was “technically” possible before, but the number of concessions needed to make it work was significant.

But were those two things it? Ray Tracing and Upscaling?

You hardly hear developers asking for anything specific—and I say that as a developer. All we know to ask for is more headroom. Everything else is software, and thus our problem. Lighting is a SIGNIFICANT portion of the rendering equation, so if that’s solved, what else is there to do?

Do we actually ever need fundamentally “new” console hardware?

…

YES

These performance efficiency metrics are abysmal! Terrible! AWFUL!

The PS2 should not be comparable to the rendering efficiency of the latest hardware! We should be making that thing look like it was having to harness the thermal energy of an entire sun to run at 480p! We shouldn’t be spending the better part of the afternoon moving a texture in and out of cache! We shouldn’t be having to rely on an upscaler to guess 3/4ths of the pixels in a frame just to hit 4k 60!

I lied when I said the PS2 was half the pixel fill rate. THE PS5 CHEATS TO GET TO 4K 60! EVERYTHING IS CHEATING. ALL OF THE PIXELS ARE FAKE. Which is fine, but, IF WE’RE GOING TO CHEAT WE SHOULD GET MORE OUT OF IT.
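The “3/4ths” comes straight from the resolutions: a 1080p internal image upscaled to 4k computes only a quarter of the output pixels. Assuming, purely for illustration, a game that renders internally at 1080p 60 (real internal resolutions vary by game and often from frame to frame), the per-cycle comparison with the PS2 flips:

```python
# Upscaling arithmetic. Assumption: 1080p internal resolution, 4k output, 60 fps.
NATIVE = 1920 * 1080  # pixels actually rendered
OUTPUT = 3840 * 2160  # pixels shown on screen

rendered_fraction = NATIVE / OUTPUT       # 0.25
guessed_fraction = 1 - rendered_fraction  # 0.75, filled in by the upscaler

PS5_CLOCK_HZ = 2233e6
# 640x480 at 60 fps on the PS2's 147.456 MHz Graphics Synthesizer: 0.125.
PS2_PIXELS_PER_CYCLE = 640 * 480 * 60 / 147.456e6
ps5_rendered_per_cycle = NATIVE * 60 / PS5_CLOCK_HZ  # about 0.056

print(f"upscaler guesses {guessed_fraction:.0%} of each frame")
print(f"PS5 renders {ps5_rendered_per_cycle / PS2_PIXELS_PER_CYCLE:.0%} of the PS2's per-cycle rate")
```

Under those assumptions, the PS5 is computing fewer than half as many real pixels per cycle as the PS2 did.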

Developers don’t need so much freedom and we don’t want it! We don’t care how anti-aliasing is implemented, we just want it to work (I mean, I care, but I’m in a minority). That’s why so many games are made in Unreal. Most studios do not have the resources to research three different shading methods to figure out which one they want to support in their game. They want to shove a model into the engine, call the “render” function and badda-bing, badda-boom, your knock-off 2B is dancing on screen.

The PS2 is fondly remembered for having a great API with great documentation that developers could get up to speed on quickly. The PS3 was the one infamous for having alien hardware, but the PS2 really wasn’t much less quirky. But—as the legend goes—with the PS2, Sony made it clear and intuitive how to harness its weirdness.

And greatness came out of it.

Now the industry is trained on Unreal and Unity, but those have turned into giant, confused hydras with so many foot guns you’d think the CIA was funding them.

Again, another topic for another time.

Well, I say, if most of the industry is going to be using the same formulas for rendering, let’s punt as much of that implementation back into the hardware layer as we can get a real benefit from.

Might that lead to console games having the same “Unreal Engine” sameness that people bemoan? Maybe. But just look at how fondly everyone looks back on old consoles. That’s actually an upside for consoles: games made for your system look like they were made for that system.

What I dream of is for a console maker to say, “Hey, the industry has come up with a lot of pretty great tools already. Let’s pick a set of our favorites and double down on them. Let’s become the best at using them. Let’s weave them into the very essence of our system. Let’s design and iterate until we reach that ‘advertised pixel fill rate.’ And then let’s use that mastery to build a system so efficient that it makes the bloated PC hardware space quake in its boots and become the unquestionable living room standard that everyone wants to support.”

Apple has already shown that it’s possible. Watt-for-watt, their M-series processors are punching many classes above their weight in the desktop computing environment. There are a lot of logistical hurdles to doing the same in the gaming space: after all, chip manufacturing has also changed fundamentally since the PS2 days. But could Sony have worked with AMD to get something with at least a bit more character in the PS5? Obviously. They’re doing it now.

Innovation in the console space will never look quite like it did in the golden age. When we get the next NES-to-N64 leap, it probably won’t happen at the launch of any specific console (looking at you, VR). Computer technology is simply too mature for that to keep happening. There aren’t the same obvious chasms between what we can and cannot do that made the changes of yesteryear feel so dramatic: the mine of novelty is running dry.

But… the space for refining what we have is immense, and I think it will take many more new consoles before we’ve reached the limits there.

And if you still really need someone to blame for why gaming doesn’t feel exciting anymore, you can still blame the corporate side of the industry. It’s still their fault, just in a different way than you might have thought.